We presented MayaNAS and MayaScale at OpenZFS Developer Summit 2025 in Portland, Oregon. The centerpiece of our presentation: objbacker.io—a native ZFS VDEV implementation for object storage that bypasses FUSE entirely, achieving 3.7 GB/s read throughput directly from S3, GCS, and Azure Blob Storage.

Presenting at OpenZFS Summit

The OpenZFS Developer Summit brings together the core developers and engineers who build and maintain ZFS across platforms. It was the ideal venue to present our approach to cloud-native storage: using ZFS's architectural flexibility to create a hybrid storage system that combines the performance of local NVMe with the economics of object storage.

Our 50-minute presentation covered the complete Zettalane storage platform—MayaNAS for file storage and MayaScale for block storage—with a deep technical dive into the objbacker.io implementation that makes ZFS on object storage practical for production workloads.

The Cloud NAS Challenge

Cloud storage economics present a fundamental problem for NAS deployments:

- 100 TB on EBS (gp3): $96K/year

- 100 TB on AWS EFS: $360K/year
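The two annual figures can be reproduced with simple capacity arithmetic. The sketch below assumes flat per-GB monthly rates of $0.08 for gp3 and $0.30 for EFS; these rates are assumptions chosen because they match the totals above, not numbers quoted in the talk, and they ignore IOPS, throughput, and request charges. The function name `AnnualCostUSD` is hypothetical.

```go
package main

import "fmt"

// AnnualCostUSD returns the yearly cost of storing capacityGB at a flat
// per-GB monthly rate. It deliberately ignores IOPS, throughput, and
// request charges.
func AnnualCostUSD(capacityGB, usdPerGBMonth float64) float64 {
	return capacityGB * usdPerGBMonth * 12
}

func main() {
	const capacityGB = 100_000 // 100 TB in decimal units

	// Assumed rates (not from the talk); check current cloud pricing.
	const gp3Rate = 0.08 // USD per GB-month
	const efsRate = 0.30 // USD per GB-month

	fmt.Printf("EBS gp3: $%.0f/year\n", AnnualCostUSD(capacityGB, gp3Rate))
	fmt.Printf("EFS:     $%.0f/year\n", AnnualCostUSD(capacityGB, efsRate))
}
```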

The insight that drives MayaNAS: not all data needs the same performance tier. Metadata operations require low latency and high IOPS. Large sequential data needs throughput, not IOPS. ZFS's special device architecture lets us place each workload on the appropriate storage tier.
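As a rough illustration of that tiering idea, the sketch below routes metadata and small blocks to local NVMe and everything else to object storage. It is loosely modeled on how ZFS special devices and the `special_small_blocks` property behave. The types and the `PlaceBlock` function are hypothetical; the real placement decision is made inside the ZFS allocator, not in code like this.

```go
package main

import "fmt"

// Tier names a storage tier in this illustration.
type Tier string

const (
	TierNVMe   Tier = "local NVMe (special device)"
	TierObject Tier = "object storage"
)

// Block is a simplified stand-in for a ZFS block to be placed.
type Block struct {
	IsMetadata bool
	SizeBytes  int
}

// PlaceBlock sends metadata, and data blocks no larger than
// smallBlockLimit, to the NVMe tier. smallBlockLimit plays the role of
// special_small_blocks; a limit of 0 keeps all data blocks on object
// storage.
func PlaceBlock(b Block, smallBlockLimit int) Tier {
	if b.IsMetadata || b.SizeBytes <= smallBlockLimit {
		return TierNVMe
	}
	return TierObject
}

func main() {
	const limit = 128 << 10 // assumed 128 KiB threshold for the example

	blocks := []Block{
		{IsMetadata: true, SizeBytes: 16 << 10},
		{IsMetadata: false, SizeBytes: 64 << 10},
		{IsMetadata: false, SizeBytes: 1 << 20},
	}
	for _, b := range blocks {
		fmt.Printf("%+v -> %s\n", b, PlaceBlock(b, limit))
	}
}
```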

objbacker.io: Native ZFS VDEV for Object Storage

The traditional approach to ZFS on object storage uses FUSE-based filesystems like s3fs or goofys to mount buckets, then creates ZFS pools on top. This works, but FUSE adds overhead: every I/O crosses the kernel-userspace boundary twice.

objbacker.io takes a different approach. We implemented a native ZFS VDEV type (VDEV_OBJBACKER) that communicates directly with a userspace daemon via a character device (/dev/zfs_objbacker). The daemon uses native cloud SDKs (AWS SDK, Google Cloud SDK, Azure SDK) for direct object storage access.

Architecture Comparison

| Approach | I/O Path | Overhead |
|---|---|---|
| FUSE-based (s3fs) | ZFS → VFS → FUSE → userspace → FUSE → VFS → s3fs → S3 | High (multiple context switches) |
| objbacker.io | ZFS → /dev/zfs_objbacker → objbacker.io → S3 SDK | Minimal (direct path) |

How objbacker.io Works

objbacker.io is a Golang program with two interfaces:

- Frontend: ZFS VDEV interface via the /dev/zfs_objbacker character device

- Backend: Native cloud SDK integration for GCS, AWS S3, and Azure Blob Storage

ZIO to Object Storage Mapping

| ZFS VDEV I/O | /dev/zfs_objbacker | Object Storage |
|---|---|---|
| ZIO_TYPE_WRITE | WRITE | PUT object |
| ZIO_TYPE_READ | READ | GET object |
| ZIO_TYPE_TRIM | UTRIM | DELETE object |
| ZIO_TYPE_IOCTL (sync) | USYNC | Flush pending writes |
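The mapping in this table can be expressed as a small dispatch function. This is a minimal sketch, not the objbacker.io source: the `ZIOType` constants, the `Mapping` struct, and `MapZIO` are hypothetical names, and the numeric values do not correspond to the real ZFS enum.

```go
package main

import "fmt"

// ZIOType is a hypothetical stand-in for the ZFS I/O types in the table.
type ZIOType int

const (
	ZIOWrite ZIOType = iota
	ZIORead
	ZIOTrim
	ZIOIoctlSync
)

// Mapping pairs the character-device command with the object storage
// operation it results in.
type Mapping struct {
	DeviceOp string
	ObjectOp string
}

// MapZIO returns the table row for a given I/O type, or an error for
// types the table does not cover.
func MapZIO(t ZIOType) (Mapping, error) {
	switch t {
	case ZIOWrite:
		return Mapping{"WRITE", "PUT object"}, nil
	case ZIORead:
		return Mapping{"READ", "GET object"}, nil
	case ZIOTrim:
		return Mapping{"UTRIM", "DELETE object"}, nil
	case ZIOIoctlSync:
		return Mapping{"USYNC", "Flush pending writes"}, nil
	default:
		return Mapping{}, fmt.Errorf("unsupported ZIO type %d", t)
	}
}

func main() {
	for _, t := range []ZIOType{ZIOWrite, ZIORead, ZIOTrim, ZIOIoctlSync} {
		m, err := MapZIO(t)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s -> %s\n", m.DeviceOp, m.ObjectOp)
	}
}
```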

Data Alignment

With ZFS recordsize set to 1MB, each ZFS block maps directly to a single object. Aligned writes go directly as PUT requests without caching. This alignment is critical for performance—object storage performs best with large, aligned operations.

Objects are named sequentially within the bucket: bucket/00001, bucket/00002, etc.
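To make the alignment rule concrete, the sketch below maps a byte offset to an object name and checks whether a write covers exactly one object. It assumes a 1 MiB object size, 1-based numbering, and five-digit zero padding, inferred only from the `bucket/00001` pattern; the actual naming scheme and the function names `ObjectKey` and `IsAligned` are assumptions.

```go
package main

import "fmt"

// ObjectSize is the assumed object size, matching a 1MB ZFS recordsize.
const ObjectSize int64 = 1 << 20

// ObjectKey returns the object name that holds the given byte offset.
// The 1-based, five-digit format is an assumption based on the
// bucket/00001 naming pattern.
func ObjectKey(offset int64) string {
	return fmt.Sprintf("%05d", offset/ObjectSize+1)
}

// IsAligned reports whether a write starts on an object boundary and
// covers exactly one whole object, so it could be sent as a single PUT.
func IsAligned(offset, length int64) bool {
	return offset%ObjectSize == 0 && length == ObjectSize
}

func main() {
	offsets := []int64{0, ObjectSize, 5*ObjectSize + 4096}
	for _, off := range offsets {
		fmt.Printf("offset %d -> bucket/%s aligned=%v\n",
			off, ObjectKey(off), IsAligned(off, ObjectSize))
	}
}
```

A write that fails the `IsAligned` check would need some read-modify-write or caching step before it could be uploaded, which is why matching the recordsize to the object size matters.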

Validated Performance Results

We presented benchmark results from AWS c5n.9xlarge instances (36 vCPUs, 96 GB RAM, 50 Gbps network):

- Sequential read from S3: 3.7 GB/s

- Sequential write to S3: 2.5 GB/s

The key to this throughput: parallel bucket I/O. With 6 S3 buckets configured as a striped pool, ZFS parallelizes reads and writes across multiple object storage endpoints, saturating the available network bandwidth.
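The effect of striping across buckets can be sketched as a fan-out of concurrent requests. In the example below, blocks are assigned to buckets round-robin and fetched in parallel with goroutines. This is a simplification under stated assumptions: in the real system ZFS decides where blocks land across the striped devices, and the `fetch` callback here is a hypothetical stand-in for an object GET.

```go
package main

import (
	"fmt"
	"sync"
)

// BucketFor assigns a block to a bucket round-robin. Real ZFS allocation
// across striped devices is more involved; this is only an illustration.
func BucketFor(blockIndex, numBuckets int) int {
	return blockIndex % numBuckets
}

// FetchAll reads numBlocks blocks concurrently, one goroutine per block.
// fetch stands in for a GET against the chosen bucket.
func FetchAll(numBlocks, numBuckets int, fetch func(bucket, block int) []byte) [][]byte {
	out := make([][]byte, numBlocks)
	var wg sync.WaitGroup
	for i := 0; i < numBlocks; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			// Each goroutine writes only its own slice element.
			out[i] = fetch(BucketFor(i, numBuckets), i)
		}(i)
	}
	wg.Wait()
	return out
}

func main() {
	const numBuckets = 6 // as in the benchmark configuration

	fakeGet := func(bucket, block int) []byte {
		return []byte(fmt.Sprintf("block %d from bucket %d", block, bucket))
	}
	for _, data := range FetchAll(12, numBuckets, fakeGet) {
		fmt.Println(string(data))
	}
}
```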

FIO Test Configuration

| Parameter | Value |
|---|---|
| ZFS Recordsize | 1MB (aligned with object size) |
| Block Size | 1MB |
| Parallel Jobs | 10 concurrent FIO jobs |
| File Size | 10 GB per job (100 GB total) |
| I/O Engine | sync (POSIX synchronous I/O) |

MayaScale: High-Performance Block Storage

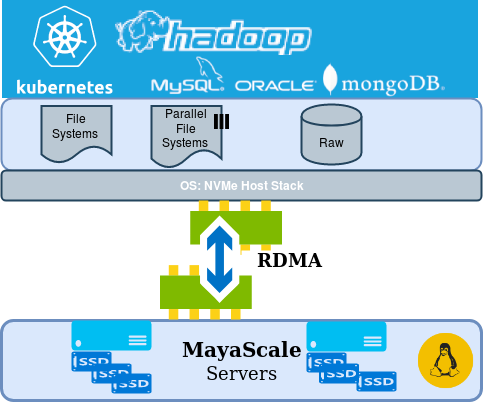

We also presented MayaScale, our NVMe-oF block storage solution for workloads requiring sub-millisecond latency. MayaScale uses local NVMe SSDs with Active-Active HA clustering.

MayaScale Performance Tiers (GCP)

| Tier | Write IOPS (<1ms) | Read IOPS (<1ms) | Best Latency |
|---|---|---|---|
| Ultra | 585K | 1.1M | 280 µs |
| High | 290K | 1.02M | 268 µs |
| Medium | 175K | 650K | 211 µs |
| Standard | 110K | 340K | 244 µs |
| Basic | 60K | 120K | 218 µs |

Multi-Cloud Architecture

Both MayaNAS and MayaScale deploy consistently across AWS, Azure, and GCP. Same Terraform modules, same ZFS configuration, same management interface. Only the cloud-specific networking and storage APIs differ.

| Component | AWS | Azure | GCP |
|---|---|---|---|
| Instance | c5.xlarge | D4s_v4 | n2-standard-4 |
| Block Storage | EBS gp3 | Premium SSD | pd-ssd |
| Object Storage | S3 | Blob Storage | GCS |
| VIP Migration | ENI attach | LB health probe | IP alias |
| Deployment | CloudFormation | ARM Template | Terraform |

Watch the Full Presentation

The complete 50-minute presentation will be available on the OpenZFS YouTube channel once OpenZFS publishes the recording.

Presentation Highlights

- 0:00 - Introduction and Zettalane overview

- 5:00 - Zettalane ZFS port architecture (illumos-gate based)

- 12:00 - The cloud NAS cost challenge

- 18:00 - MayaNAS hybrid architecture with ZFS special devices

- 25:00 - objbacker.io deep dive: native VDEV implementation

- 35:00 - Performance benchmarks on AWS

- 42:00 - MayaScale NVMe-oF block storage

- 48:00 - Q&A and future directions

Getting Started

Deploy MayaNAS or MayaScale on your preferred cloud platform:

Conclusion

Presenting at OpenZFS Developer Summit 2025 gave us the opportunity to share our approach with the community that makes ZFS possible. The key technical contribution: objbacker.io demonstrates that native ZFS VDEV integration with object storage is practical and performant, achieving 3.7 GB/s throughput without FUSE overhead.

MayaNAS with objbacker.io delivers enterprise-grade NAS on object storage with 70%+ cost savings versus traditional cloud block storage. MayaScale provides sub-millisecond block storage with Active-Active HA for latency-sensitive workloads. Together, they cover 90% of enterprise storage needs on any major cloud platform.

Special thanks to the OpenZFS community for the foundation that makes this possible.
