We achieved a major milestone: 2.3 million IOPS with 192 microsecond latency on Google Cloud Platform. This validated performance demonstrates that cloud storage can deliver local-SSD speeds while maintaining enterprise-grade high availability.

Breaking the 2M IOPS Barrier

In October 2025, we ran comprehensive SNIA-compliant FIO benchmarks on Google Cloud's n2-highcpu-64 instances with local NVMe SSDs. The results exceeded our expectations and set a new benchmark for what's possible with cloud storage.

Validated Achievement

2.3 million read IOPS with sub-200 microsecond latency, achieved on standard Google Cloud infrastructure using n2-highcpu-64 instances with local SSDs and 100 Gbps networking.
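Every 4K random I/O moves a fixed number of bytes, so an IOPS figure converts directly into bandwidth. The Python arithmetic below shows how much of the 100 Gbps network the 2.3M IOPS result represents. It assumes 4 KiB (4096-byte) transfers and ignores NVMe/TCP and TCP/IP protocol overhead.

```python
KIB = 1024


def bandwidth_bytes_per_s(iops: float, block_size_bytes: int) -> float:
    """Convert an IOPS figure at a fixed block size into bytes per second."""
    return iops * block_size_bytes


bw = bandwidth_bytes_per_s(2_300_000, 4 * KIB)
print(f"{bw / 1e9:.2f} GB/s")        # 9.42 GB/s (decimal gigabytes)
print(f"{bw * 8 / 1e9:.1f} Gbit/s")  # 75.4 Gbit/s, before protocol overhead
```

That is roughly three quarters of a 100 Gbps link, even before protocol headers are counted.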

The Numbers

Real Application Performance

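For latency-sensitive applications, what matters most is single-job, queue-depth-1 (QD1) performance. At QD1 each I/O must complete before the next one is issued, so this is the per-request latency a synchronous application actually waits on: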

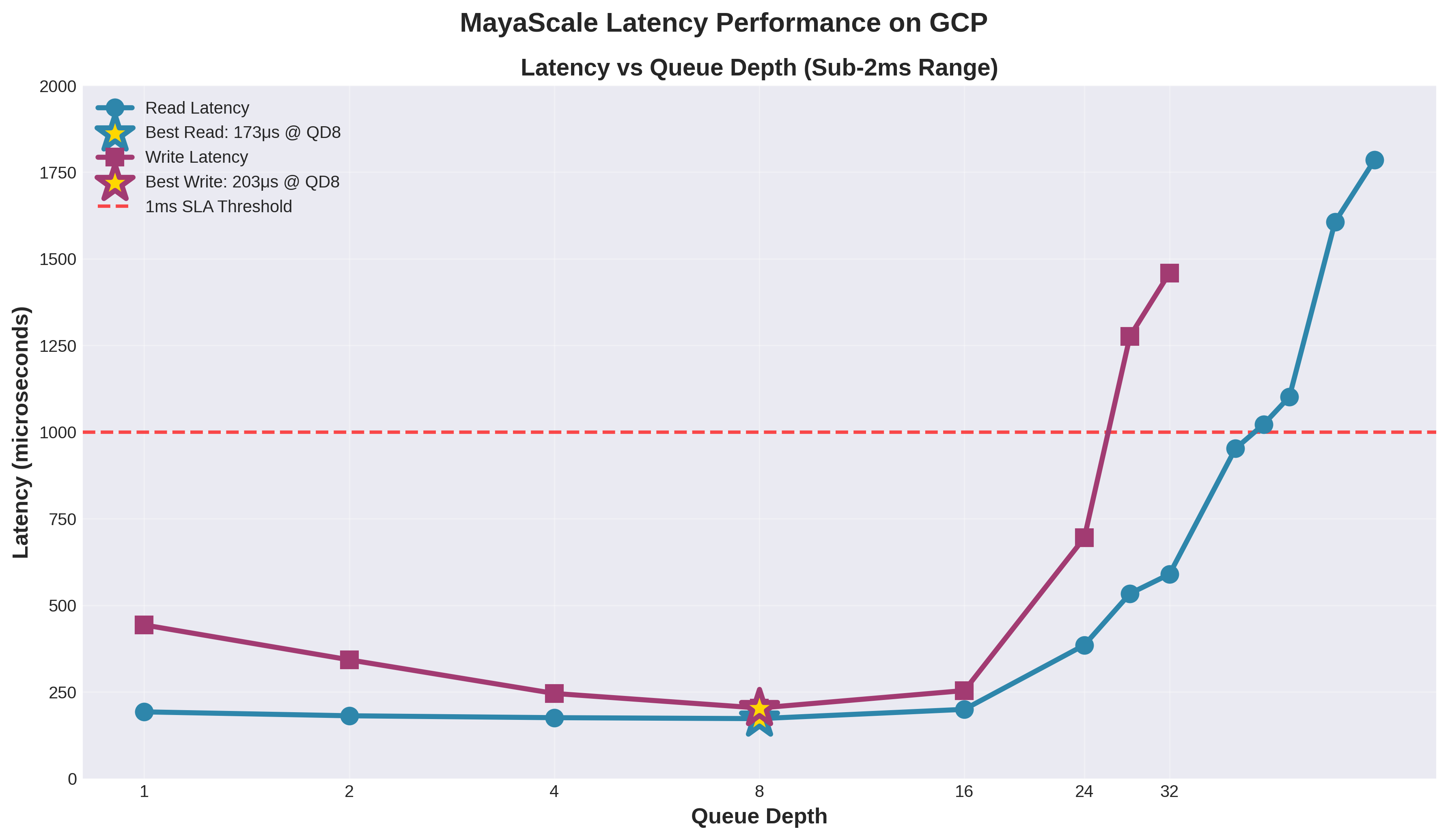

Latency Performance Curve

The latency performance curve shows MayaScale staying below 1 ms across the tested queue depths. Read latency reached its best value of 173μs at QD8, and write latency was 203μs, also at QD8:

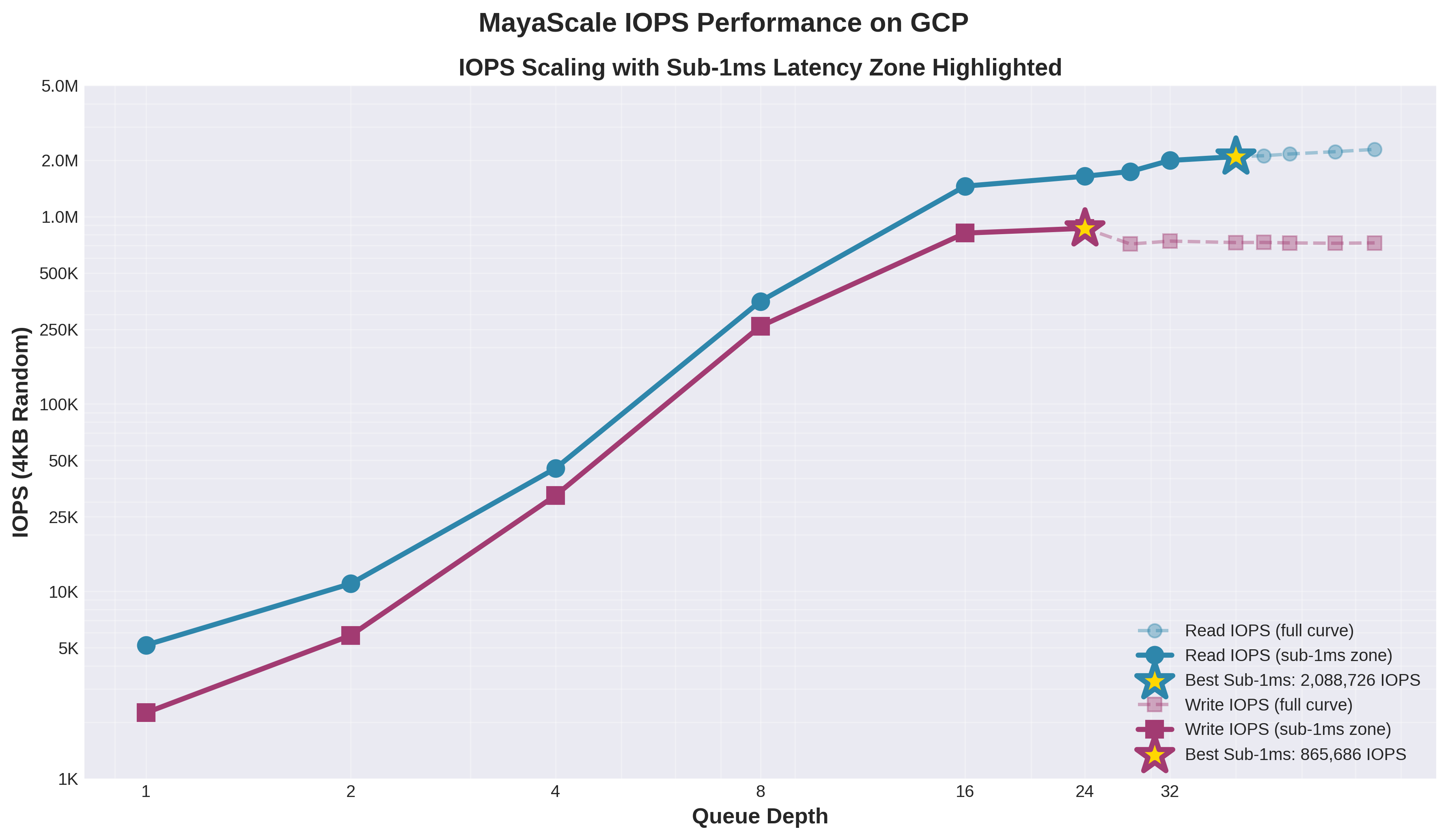

Maximum Validated IOPS

For highly parallel workloads that spread I/O across many jobs, aggregate throughput keeps climbing as queue depth grows. The curve below plots IOPS against queue depth and highlights the zone where latency stays under 1 ms:

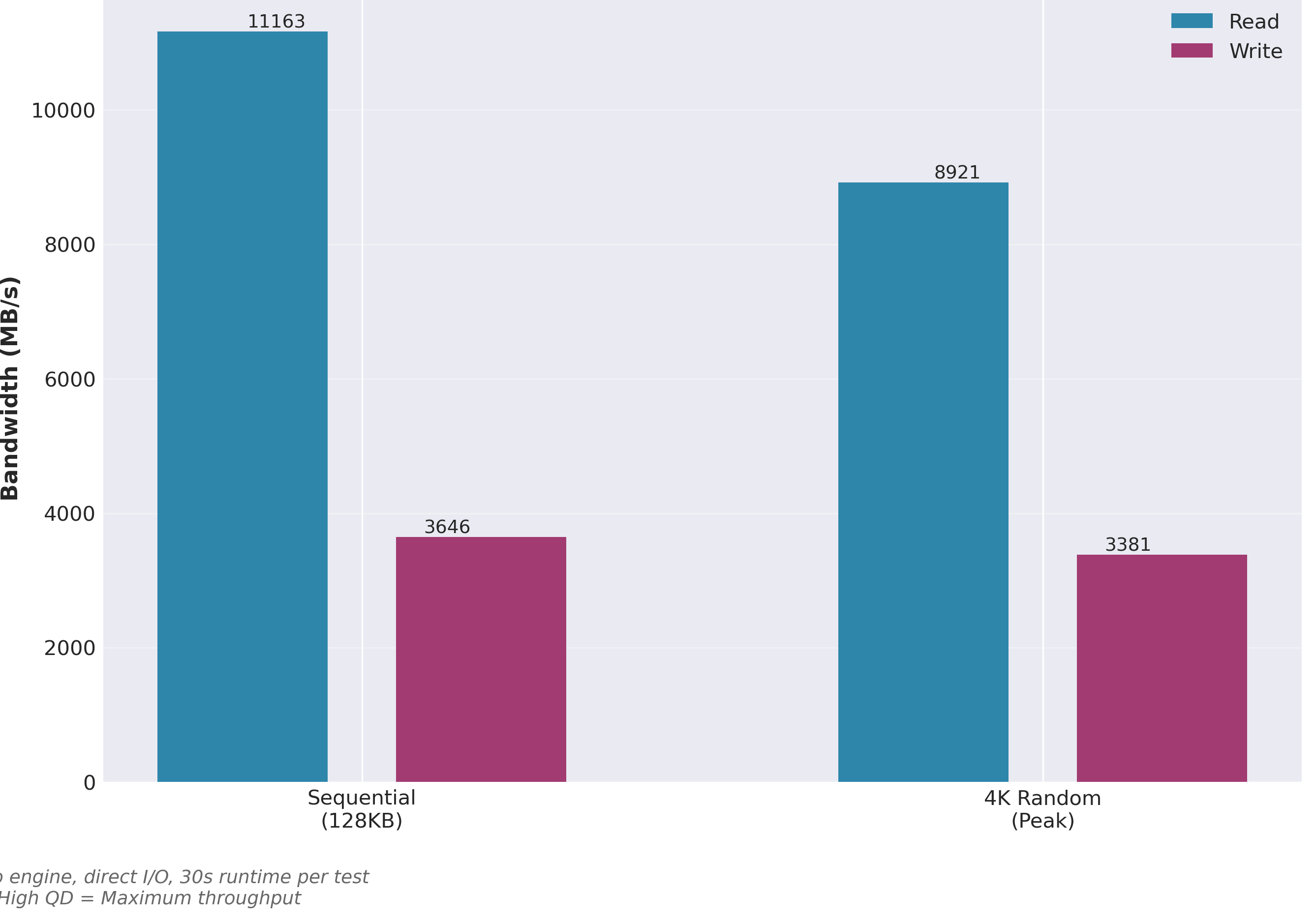
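Queue depth, latency and IOPS are linked by Little's law: the average number of I/Os in flight equals throughput multiplied by average latency. The Python sketch below applies this law to the headline numbers. It assumes that the 192 μs figure is the average latency at the 2.3M IOPS operating point. The 200 μs QD1 latency in the single-job case is a hypothetical round number, not a measured result.

```python
def outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's law: average I/Os in flight = throughput x average latency."""
    return iops * latency_s


def max_iops(outstanding: float, latency_s: float) -> float:
    """Rearranged Little's law: throughput = I/Os in flight / average latency."""
    return outstanding / latency_s


# Peak operating point from this post (assumes 192 us is the average at peak).
in_flight = outstanding_ios(2_300_000, 192e-6)
print(f"I/Os in flight at peak: {in_flight:.0f}")  # 442

# A single job at QD1 keeps exactly one I/O in flight, so its IOPS ceiling
# is 1 / latency. 200 us is a hypothetical latency chosen for illustration.
qd1_iops = max_iops(1, 200e-6)
print(f"QD1 ceiling at 200 us: {qd1_iops:.0f} IOPS")  # 5000
```

The peak therefore needs several hundred I/Os outstanding across all jobs at once. A single QD1 job, by contrast, is capped at a few thousand IOPS regardless of how fast the cluster is.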

Sequential Bandwidth Performance

In addition to exceptional random I/O performance, MayaScale delivers outstanding sequential throughput for large-block workloads:

Figure: Sequential vs. 4K random bandwidth comparison

Note: Write bandwidth is limited by synchronous replication. Every write must be committed on both nodes before it is acknowledged. This ensures that no acknowledged write is lost during failover, while still sustaining 3.6 GB/s of write throughput.
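Synchronous replication also shapes write latency. A write can only be acknowledged after both the local commit and the remote commit finish, and the remote commit also pays a network round trip. The Python model below illustrates this under simplifying assumptions:

- It ignores queuing and CPU time.
- It does not know whether MayaScale issues the local and remote writes in parallel or one after the other.
- All microsecond values are hypothetical.

```python
def sync_write_ack_latency_us(local_commit_us: float,
                              remote_commit_us: float,
                              replication_rtt_us: float,
                              parallel: bool = True) -> float:
    """Latency until a synchronously replicated write can be acknowledged.

    The remote copy costs one round trip on the replication network plus the
    peer's own commit time. If both copies are written in parallel, the client
    waits for the slower of the two; if they are written one after the other,
    the two costs add up.
    """
    remote_path = replication_rtt_us + remote_commit_us
    if parallel:
        return max(local_commit_us, remote_path)
    return local_commit_us + remote_path


# Hypothetical inputs, for illustration only.
print(sync_write_ack_latency_us(30, 30, 50))                  # parallel: 80
print(sync_write_ack_latency_us(30, 30, 50, parallel=False))  # serial: 110
```

In either case the remote path sets a latency floor that an unreplicated single node would not pay. This is consistent with writes measuring slower than reads in the results above.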

How We Achieved This

Hardware Configuration

The benchmark cluster used the following Google Cloud configuration:

- Instance Type: n2-highcpu-64 (64 vCPU, 64 GB RAM)

- Local Storage: 16x local NVMe SSDs per node (375 GB each)

- Total Raw Capacity: 12 TB across 32 drives (2 nodes)

- Usable Capacity: 6 TB with Active-Active HA

- Network: 100 Gbps tier-1 networking

- Location: us-central1-f (same zone deployment)
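The capacity figures follow directly from the drive count. The Python arithmetic below reproduces them. It assumes two-way mirroring (one full copy of the data on each node) and ignores filesystem and metadata overhead.

```python
DRIVE_GB = 375         # capacity of one local NVMe SSD, as listed above
DRIVES_PER_NODE = 16
NODES = 2
REPLICAS = 2           # assumed: synchronous mirroring keeps one full copy per node

raw_gb = DRIVE_GB * DRIVES_PER_NODE * NODES
usable_gb = raw_gb // REPLICAS

print(f"Raw: {raw_gb} GB across {DRIVES_PER_NODE * NODES} drives")  # 12000 GB, 32 drives
print(f"Usable (mirrored): {usable_gb} GB")                         # 6000 GB
```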

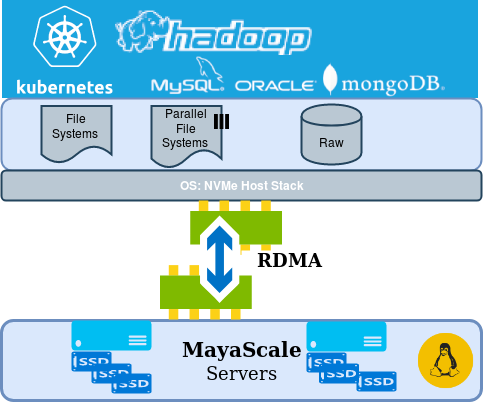

Software Architecture

MayaScale uses NVMe-over-Fabrics (NVMe-oF) to pool local NVMe storage across instances, creating shared storage that maintains near-local performance:

- Protocol: NVMe-over-TCP for cloud-native shared storage

- Active-Active Clustering: Both nodes serve I/O simultaneously

- Synchronous Replication: Data written to both nodes before acknowledgment

- Dual-NIC Architecture: Separate networks for client I/O and replication

- Sub-second Failover: Automatic recovery with no data loss
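Two properties in this list, synchronous replication and active-active serving, can be illustrated with a toy in-memory model. The Python sketch below is hypothetical and far simpler than a real NVMe-oF target. `Node` and `MirroredVolume` are illustrative names. A write counts as acknowledged once every online node holds the block, and reads alternate between healthy nodes. Resynchronizing a node that comes back online is not modeled.

```python
class Node:
    """One storage node holding a block map (LBA -> data)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: dict[int, bytes] = {}
        self.online = True


class MirroredVolume:
    """Toy active-active volume: writes land on every online node,
    and reads are served round-robin by whichever nodes are online."""

    def __init__(self, nodes: list[Node]) -> None:
        self.nodes = nodes
        self._next = 0  # round-robin cursor for reads

    def _online(self) -> list[Node]:
        healthy = [n for n in self.nodes if n.online]
        if not healthy:
            raise OSError("no storage node available")
        return healthy

    def write(self, lba: int, data: bytes) -> None:
        # Return (i.e. acknowledge) only after every online node stored the block.
        for node in self._online():
            node.blocks[lba] = data

    def read(self, lba: int) -> tuple[str, bytes]:
        healthy = self._online()
        node = healthy[self._next % len(healthy)]
        self._next += 1
        return node.name, node.blocks[lba]


a, b = Node("node-a"), Node("node-b")
vol = MirroredVolume([a, b])
vol.write(0, b"hello")
print(vol.read(0))  # ('node-a', b'hello')
print(vol.read(0))  # ('node-b', b'hello')
a.online = False    # simulate losing node-a
print(vol.read(0))  # ('node-b', b'hello'), still served from the mirror
```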

Testing Methodology

All performance numbers were validated using SNIA-compliant FIO benchmarks:

- 4KB random read and write tests

- Queue depths from 1 to 64

- Multiple job counts (1 to 64 threads)

- Direct I/O to bypass OS cache

- Multiple test runs for consistency
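The test matrix above can be expressed as a sweep of fio invocations. The Python sketch below only builds the command lines and does not run them. The following are assumptions, not the exact parameters behind these results:

- the device path;
- power-of-two steps between 1 and 64;
- the libaio engine;
- the 60-second runtime.

```python
import itertools
import shlex

DEVICE = "/dev/nvme1n1"  # hypothetical NVMe-oF namespace on the client
QUEUE_DEPTHS = [1, 2, 4, 8, 16, 32, 64]
JOB_COUNTS = [1, 2, 4, 8, 16, 32, 64]
PATTERNS = ["randread", "randwrite"]  # randwrite on a raw device destroys its data


def fio_cmd(rw: str, iodepth: int, numjobs: int, runtime_s: int = 60) -> list[str]:
    """Build one fio invocation for a 4 KiB random I/O test point."""
    return [
        "fio",
        f"--name={rw}-qd{iodepth}-j{numjobs}",
        f"--filename={DEVICE}",
        f"--rw={rw}",
        "--bs=4k",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        "--direct=1",         # bypass the OS page cache
        "--ioengine=libaio",  # assumed engine; the post does not name one
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
        "--output-format=json",
    ]


sweep = [fio_cmd(rw, qd, nj)
         for rw, qd, nj in itertools.product(PATTERNS, QUEUE_DEPTHS, JOB_COUNTS)]

print(len(sweep))  # 98 test points
print(shlex.join(sweep[0]))
```

To repeat each run for consistency, loop over `sweep` several times and compare the JSON results across passes.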

What This Means

This level of performance opens up new possibilities for cloud workloads that were previously confined to on-premises infrastructure:

High-Performance Databases

Run PostgreSQL, MySQL and Oracle on storage with sub-200μs latency, supporting hundreds of thousands of transactions per second with minimal I/O wait.

Real-Time Analytics

Process streaming data with storage access times measured in microseconds, supporting real-time dashboards and operational analytics at massive scale.

AI/ML Training

Reduce data-loading bottlenecks for GPU and TPU workloads, and give distributed training jobs fast access to shared datasets.

NoSQL at Scale

Run Cassandra, ScyllaDB and MongoDB at millions of operations per second, with low-latency reads for real-time applications.

Deployment and Access

This level of performance is available through MayaScale's Ultra tier on Google Cloud:

- Terraform Deployment: Infrastructure-as-Code with policy-based instance selection

- Policy Name: "zonal-ultra-performance" automatically selects optimal configuration

- Deployment Time: Typically under 15 minutes for full Active-Active cluster

- Client Support: Linux (RHEL, Ubuntu, Debian) with NVMe-oF drivers

- Kubernetes Integration: CSI driver available for GKE deployments
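On Linux clients, NVMe/TCP volumes are typically attached with the standard nvme-cli tool. The Python sketch below only assembles the commands. The node addresses and subsystem NQN are hypothetical. Connecting to both nodes assumes that the deployment exposes the volume on each node for multipath access. MayaScale's documented client procedure may differ.

```python
import shlex

NVME_TCP_PORT = 4420  # IANA-assigned port for NVMe over Fabrics


def nvme_tcp_connect_cmds(targets: list[str], subsystem_nqn: str,
                          port: int = NVME_TCP_PORT) -> list[list[str]]:
    """Build commands that attach one NVMe/TCP subsystem via each target address."""
    cmds = [["modprobe", "nvme-tcp"]]  # load the NVMe/TCP host driver
    for addr in targets:
        cmds.append([
            "nvme", "connect",
            "--transport", "tcp",
            "--traddr", addr,
            "--trsvcid", str(port),
            "--nqn", subsystem_nqn,
        ])
    return cmds


# Hypothetical node addresses and NQN, for illustration only.
for cmd in nvme_tcp_connect_cmds(["10.0.0.11", "10.0.0.12"],
                                 "nqn.2025-10.com.example:ultra-vol1"):
    print(shlex.join(cmd))
```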

Sample Terraform Configuration

```hcl
module "mayascale_ultra" {
  source = "github.com/zettalane/terraform-gcp-mayascale"

  cluster_name       = "ultra-cluster"
  performance_policy = "zonal-ultra-performance"
  zone               = "us-central1-f"
  project_id         = "your-project-id"

  # Automatically deploys:
  # - 2x n2-highcpu-64 instances
  # - 32x local NVMe SSDs (16 per node)
  # - Active-Active HA configuration
  # - Dual-NIC networking
}
```

Other Performance Options

MayaScale offers five performance tiers on Google Cloud, all with sub-millisecond latency and Active-Active HA:

- Basic: 100K IOPS - Development and testing

- Standard: 380K IOPS - General purpose applications

- Medium: 700K IOPS - Production databases

- High: 900K IOPS - High-performance databases

- Ultra: 2.3M IOPS - Maximum performance workloads
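The hypothetical Python helper below picks a starting tier from an IOPS requirement, using only the headline figures above. It is not part of MayaScale's deployment policies. Real sizing should also account for latency targets, capacity and read/write mix.

```python
from typing import Optional

# Headline IOPS per tier, as listed above (smallest first).
TIERS = [
    ("Basic", 100_000),
    ("Standard", 380_000),
    ("Medium", 700_000),
    ("High", 900_000),
    ("Ultra", 2_300_000),
]


def smallest_tier_for(required_iops: int, headroom: float = 0.0) -> Optional[str]:
    """Return the smallest tier whose headline IOPS covers the requirement
    plus an optional safety margin, or None if no tier is large enough."""
    target = required_iops * (1 + headroom)
    for name, iops in TIERS:
        if iops >= target:
            return name
    return None


print(smallest_tier_for(500_000))                # Medium
print(smallest_tier_for(500_000, headroom=0.5))  # High
print(smallest_tier_for(3_000_000))              # None
```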

See our MayaScale on GCP page for detailed tier comparisons and performance graphs for all tiers.

Looking Forward

Achieving 2.3 million IOPS with 192 microsecond latency on standard Google Cloud infrastructure demonstrates that the performance gap between cloud and on-premises storage is closing rapidly. With the right architecture and technology, cloud storage can deliver performance that rivals—and in some cases exceeds—traditional datacenter deployments.

This breakthrough enables a new generation of cloud-native applications that demand both extreme performance and enterprise-grade availability. Whether you're running high-frequency trading systems, real-time analytics, or AI/ML training workloads, this level of performance is now accessible on public cloud infrastructure.

Experience 2M+ IOPS Performance

Deploy MayaScale Ultra tier on Google Cloud and validate the performance for yourself. Free trial available.
